One-Way Analysis of Variance

What is one-way ANOVA?

What is the hypothesis of one-way ANOVA?

What is the underlying model of one-way ANOVA?

A motivating example for one-way ANOVA

When do we want to use one-way ANOVA?

When might we not want to use one-way ANOVA?

What’s the conceptual idea behind one-way ANOVA?

- big picture idea

- show simulation

- perhaps reference distribution simulation

What are the steps to perform one-way ANOVA?

- notation

- SS

- df

- MS

- F

- p-value

- ANOVA table for organizing
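The steps listed above can be sketched in Python as follows. This is a minimal illustration, not the R workflow covered later: the function name `one_way_anova` and the toy data are hypothetical, and the sketch assumes the observations are supplied as one list per factor level. It computes the SS, df, MS, and F entries of the ANOVA table; the p-value is the area to the right of the observed F under an F distribution with \(a-1\) and \(N-a\) degrees of freedom.

```python
from statistics import fmean


def one_way_anova(groups):
    """One-way ANOVA table entries for a list of groups (one list per factor level)."""
    a = len(groups)                          # number of factor levels
    N = sum(len(g) for g in groups)          # total number of observations
    grand_mean = fmean([y for g in groups for y in g])
    group_means = [fmean(g) for g in groups]

    # Treatment SS: size-weighted squared deviations of group means from the grand mean
    ss_treat = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Error SS: squared deviations of each observation from its own group mean
    ss_error = sum((y - m) ** 2 for g, m in zip(groups, group_means) for y in g)

    df_treat, df_error = a - 1, N - a
    ms_treat, ms_error = ss_treat / df_treat, ss_error / df_error
    return {
        "SS": (ss_treat, ss_error, ss_treat + ss_error),   # treatment, error, total
        "df": (df_treat, df_error, N - 1),
        "MS": (ms_treat, ms_error),
        "F": ms_treat / ms_error,
    }


# Hypothetical toy data: three treatments, three observations each
table = one_way_anova([[4, 5, 6], [6, 7, 8], [9, 10, 11]])
print(table)
```

The sketch sticks to the standard library; with SciPy installed, `scipy.stats.f.sf(F, df_treat, df_error)` would give the upper-tail p-value.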

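Why does ANOVA work?

- a bit more on the conceptual side, now that the steps are understood

- \( E(\text{MS}_E) = \sigma^2 \), and \( E(\text{MS}_{\text{Treatment}}) = \sigma^2 \) when the null hypothesis is true.

- how the ratio of \( \text{MS}_{\text{Treatment}} \) to \( \text{MS}_E \) follows an F distribution if the null is true

- how, if the null is not true, \( E(\text{MS}_{\text{Treatment}}) \) is inflated by the treatment effects; give the formula.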

How to perform one-way ANOVA in R

Fitting the model

Diagnosing model adequacy and assumptions

One-way ANOVA post hoc tests

Once you reject the null hypothesis of the one-way ANOVA overall test, what do you do?

Follow-up, or post hoc, tests.

The problem of multiple comparisons

Family-wise (or experiment-wise) error rate:

\[P(\text{reject at least one } H_0 \text{ among all the tests} \mid \text{all of the } H_0 \text{ are true})\]
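As a rough illustration of why this matters, suppose the \(m\) tests were independent and each were run at level \(\alpha\); then the family-wise error rate would be \(1 - (1-\alpha)^m\). Pairwise comparisons among the same set of treatment means are not independent, so this is only an approximation, and the function name below is hypothetical. With 5 treatments there are 10 pairwise comparisons.

```python
def familywise_error_rate(alpha, m):
    """P(at least one false rejection) across m tests, each at level alpha,
    assuming the tests are independent and every null hypothesis is true."""
    return 1 - (1 - alpha) ** m


a = 5                      # number of treatments
m = a * (a - 1) // 2       # number of pairwise comparisons
print(m, round(familywise_error_rate(0.05, m), 3))
```

Even though each comparison uses \(\alpha = 0.05\), the chance of at least one false "significant difference" among the 10 comparisons is about 0.40 under these assumptions.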

Comparing pairs of treatment means

Fisher’s procedure and LSD, Least Significant Difference

Fisher’s procedure for post hoc tests performs pairwise t-tests only after the overall hypothesis that all group means are equal has been rejected. In this sense it is “protected”. In practice, this protection does little to control the experiment-wise error rate.

To compare treatments \(i\) and \(j\), the test statistic is

\[t_0 = \frac{\bar{y}_{i.} - \bar{y}_{j.}}{\sqrt{MS_E (\frac{1}{n_i} + \frac{1}{n_j})}}\]

If our alternative hypothesis is two-sided ( \( H_A: \mu_i \neq \mu_j \) ), then we would reject the null hypothesis if \( |t_0| > t_{\alpha/2, N-a} \). That is, we reject if our observed test statistic is larger in absolute value than the t critical value, the boundary of the rejection region at significance level \(\alpha\). Multiplying both sides by the standard error, we would reject the null hypothesis if

\[|\bar{y}_{i.} - \bar{y}_{j.}| > t_{\alpha/2, N-a} \sqrt{MS_E (\frac{1}{n_i} + \frac{1}{n_j})}\]

The right-hand side is the least significant difference (LSD). When the sample sizes of all the groups are the same, \( n_i = n_j = n \), the LSD simplifies to

\[\text{LSD} = t_{\alpha/2, N-a} \sqrt{\frac{2 MS_E}{n}}\]
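A minimal Python sketch of the LSD rule, assuming the t critical value is looked up separately (for example from a t table). The function names and the numbers in the example (4 treatments with \(n = 6\) each, so \(N - a = 20\) and \(t_{0.025, 20} \approx 2.086\), and \(MS_E = 12\)) are hypothetical.

```python
import math


def fisher_lsd(ms_error, n_i, n_j, t_crit):
    """Least significant difference for treatments i and j.

    t_crit is the critical value t_{alpha/2, N-a}, looked up separately.
    """
    return t_crit * math.sqrt(ms_error * (1 / n_i + 1 / n_j))


def differ_by_lsd(ybar_i, ybar_j, lsd):
    """True when the sample means differ by more than the LSD (reject H0: mu_i = mu_j)."""
    return abs(ybar_i - ybar_j) > lsd


# Hypothetical numbers: a = 4 treatments, n = 6 each, so N - a = 20,
# t_{0.025, 20} is about 2.086, and MS_E = 12.
lsd = fisher_lsd(ms_error=12.0, n_i=6, n_j=6, t_crit=2.086)
print(round(lsd, 3))                      # equals 2.086 * sqrt(2 * 12 / 6)
print(differ_by_lsd(20.0, 15.0, lsd))     # a difference of 5 exceeds the LSD
```

With equal sample sizes the general formula and the simplified \(\sqrt{2 MS_E / n}\) form give the same yardstick, which is what makes the compact letter display below possible.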

Constructing a compact letter display with Fisher’s LSD

The primary benefit of Fisher’s LSD is that, if the sample sizes of all groups are the same, it provides a single yardstick that we can use to quickly perform all pairwise comparisons and summarize them in a small amount of space. Such a summary is often called a “compact letter display”, for reasons that will be seen shortly.

To perform all pairwise comparisons using Fisher’s LSD, we rank the treatment means from smallest to largest. Then all means that are within the LSD of each other receive the same letter, indicating that there is not clear statistical evidence that the population means of those treatments differ from one another. An example makes this clearer:

Suppose we find that the LSD is 15. Then any two sample means that differ by more than 15 are deemed “significantly different from one another”; in other words, we have clear evidence that their population means differ at level \(\alpha\).

Suppose that we have 5 levels, or treatments, of our factor. The sample means are given in the table below.

| treatment | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| sample mean | 11 | 45 | 23 | 13 | 32 |

Next we rank the sample means:

| treatment | 1 | 4 | 3 | 5 | 2 |
|---|---|---|---|---|---|
| sample mean | 11 | 13 | 23 | 32 | 45 |

Then we check whether the differences between sample means are greater than the least significant difference. Sample means that are not more than the LSD away from one another are assigned the same letter.

| treatment | 1 | 4 | 3 | 5 | 2 |
|---|---|---|---|---|---|
| sample mean | 11 | 13 | 23 | 32 | 45 |
| | a | a | a | | |
| | | | b | b | |
| | | | | c | c |

Usually the letters are put together:

| treatment | 1 | 4 | 3 | 5 | 2 |
|---|---|---|---|---|---|
| sample mean | 11 | 13 | 23 | 32 | 45 |
| letters | a | a | a, b | b, c | c |

To interpret the above table, we look at the letters assigned to each treatment: treatments that don’t share any letter have population means that we consider clearly different. For example, treatment 2 has a population mean that is clearly different from the population means of treatments 1, 3, and 4. Treatment 5 has a population mean that is clearly different from the population means of treatments 1 and 4, but we don’t have sufficient evidence to say that the population mean of treatment 5 is different from the population means of treatments 2 and 3. Be careful not to use false logic like “We have no clear evidence for a difference in the population means of treatments 3 and 5, and we have no clear evidence for a difference in the population means of treatments 5 and 2, therefore we have no clear evidence for a difference in the means of treatments 3 and 2.” Actually, we do have clear evidence that the means of treatments 3 and 2 are different from one another, since their sample means differ by 45 - 23 = 22, which is more than the LSD of 15.

One of the issues with compact letter displays is that they can often be misinterpreted as showing that two treatments that share the same letter have population means that are equal to one another. Be careful not to do this. Sharing a letter just means that we don’t have clear evidence the means are different.
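The letter-assignment procedure can be sketched in Python, assuming equal sample sizes so that a single LSD applies to every pair. The function name `compact_letter_display` is hypothetical. The sketch ranks the means, finds each maximal run of consecutive ranked means that all lie within the LSD of the run's smallest mean, and gives each run its own letter; applied to the example above it should reproduce the letters a, a, ab, bc, c.

```python
import string


def compact_letter_display(means, lsd):
    """Letters for treatments ranked by mean; means within `lsd` of each other
    share a letter. Assumes one LSD for all pairs (equal sample sizes)."""
    order = sorted(means, key=means.get)          # treatments ranked by sample mean
    vals = [means[t] for t in order]
    groups, last_end = [], -1
    for i in range(len(vals)):
        # Extend the run starting at i while means stay within the LSD of vals[i]
        j = i
        while j + 1 < len(vals) and vals[j + 1] - vals[i] <= lsd:
            j += 1
        # Keep the run only if it is not already contained in an earlier run
        if j > last_end:
            groups.append((i, j))
            last_end = j
    letters = {t: "" for t in order}
    # zip stops after 26 runs; enough for a small illustrative example
    for letter, (i, j) in zip(string.ascii_lowercase, groups):
        for t in order[i:j + 1]:
            letters[t] += letter
    return [(t, means[t], letters[t]) for t in order]


example = {1: 11, 2: 45, 3: 23, 4: 13, 5: 32}   # treatment: sample mean
for treatment, mean, letters in compact_letter_display(example, lsd=15):
    print(treatment, mean, letters)
```

Because the ranked means are sorted, the end of each run can only move to the right as the start moves right, so checking against the end of the previous run is enough to skip runs that would add a redundant letter.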

What if the sample sizes differ?

Aside: why use \( MS_E \) instead of \( S_p^2 \) from just the two samples of interest?

Tukey’s HSD, Honest Significant Difference

Tukey’s HSD also provides confidence intervals for the pairwise differences.

Dunnett’s procedure, compare treatment means to a control

Which pairwise comparison method to use?

Linear contrasts

After we reject the null hypothesis for the overall test in one-way ANOVA, we usually want to figure out how the population means of the levels of the factor differ. Often that means performing pairwise comparisons using a procedure like Fisher’s LSD or Tukey’s HSD (see above).

For a factor with 5 levels, and so 5 population means \( \mu_1, \mu_2, \mu_3, \mu_4, \mu_5 \), these pairwise comparisons amount to testing hypotheses like these:

\[H_0: \mu_i = \mu_j \text{ for } i \neq j\] vs. \[H_A: \mu_i \neq \mu_j \text{ for } i \neq j\]

where i and j are the indices of the factor levels, in this example 1 through 5.

These pairwise tests are extremely common and useful, but sometimes we want to compare means in more complex ways; for example, does the average of the means of two levels differ from the average of the means of two other levels? In other words:

\[H_0: \frac{\mu_1 + \mu_2}{2} = \frac{\mu_3 + \mu_4}{2}\] vs. \[H_A: \frac{\mu_1 + \mu_2}{2} \neq \frac{\mu_3 + \mu_4}{2}\]

At first glance, it might not be clear why we would be interested in such a comparison; the example below illustrates such a case. Linear contrasts are a very general tool, and they encompass many complex comparisons of means as well as the pairwise comparisons.

Motivating Example for Linear contrasts

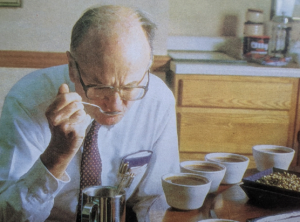

Strong coffee makes a strong mind

Total dissolved solids (TDS) is a measure of coffee brew strength. Angeloni and colleagues were interested in the TDS of coffee brewed with two methods at two cold (for coffee) temperatures. The two methods they compared were drip (water drips through a bed of grounds, 1 drop per 10 seconds) and immersion (grounds are immersed in water for 6 hours). The two temperatures were 5 and 22 degrees C. They brewed samples of coffee at each combination of method and temperature, and they also compared these cold methods to a more typical hot French press brew. Below are the sample mean TDS and standard deviation for each recipe.

| Recipe | Method | Temperature (°C) | Mean TDS (mg/mL) | Standard deviation (mg/mL) |
|---|---|---|---|---|
| 1 | Drip | 5 | 14.023 | 3.434 |
| 2 | Immersion | 5 | 18.421 | 0.686 |
| 3 | Drip | 22 | 18.004 | 4.765 |
| 4 | Immersion | 22 | 22.746 | 0.687 |
| 5 | Immersion (French press) | 98 | 27.351 | 3.711 |

It was not clear from the Angeloni paper what the sample size was for each recipe, so for our purposes here we will assume that n was 3 for each recipe.

We could perform pairwise comparisons of all 5 recipes, but the recipes have a structure that more complex comparisons can highlight. For example, we might want to know whether coffee brewed at 5 degrees C has a true mean TDS that is different from coffee brewed at 22 degrees C. In other words, is the average of the true mean TDS of recipes 1 and 2 different from the average of the true mean TDS of recipes 3 and 4? Written as null and alternative hypotheses:

\[H_0: \frac{\mu_1 + \mu_2}{2} = \frac{\mu_3 + \mu_4}{2}\] vs. \[H_A: \frac{\mu_1 + \mu_2}{2} \neq \frac{\mu_3 + \mu_4}{2}\]

By moving all the terms to one side of the equation and distributing the factor of 1/2, we could rewrite these hypotheses as: \[H_0: \frac{1}{2}\mu_1 + \frac{1}{2}\mu_2 - \frac{1}{2}\mu_3 - \frac{1}{2}\mu_4 = 0\] vs. \[H_A: \frac{1}{2}\mu_1 + \frac{1}{2}\mu_2 - \frac{1}{2}\mu_3 - \frac{1}{2}\mu_4 \neq 0\]

These hypotheses are now in the form of a linear contrast. We define a linear contrast below.

Definition of a Linear Contrast

Suppose a factor has \(a\) levels with population means \( \mu_1, \mu_2, ..., \mu_a \). Let \( c_1, c_2, ..., c_a \) denote contrast coefficients such that \( \sum_{i=1}^a c_i = 0 \). Then

\[\Gamma = \sum_{i=1}^a c_i\mu_i = c_1 \mu_1 + c_2 \mu_2 + \cdots + c_a\mu_a\]

is a linear contrast.

Looking at the example above, we can see that the contrast coefficients are \( c_1 = 1/2, c_2 = 1/2, c_3 = -1/2, c_4 = -1/2 \text{ and } c_5 = 0 \). Since the 5th recipe is not part of the hypothesis, its coefficient is 0. Also note that these coefficients sum to 0 and therefore are valid contrast coefficients.

Inference with a Linear Contrast

Hypotheses

Linear contrasts allow us to test a wide range of hypotheses using a simple and comprehensive structure. Below are the typical null and two-sided alternative hypotheses for linear contrasts.

\[H_0: \sum_{i=1}^a c_i\mu_i = \mu_C^0\] vs. \[H_A: \sum_{i=1}^a c_i\mu_i \neq \mu_C^0\]

where \( \mu_C^0 \) denotes the hypothesized value for the contrast. It is typically 0.

T-Test

We can estimate the linear contrast, \( \Gamma = \sum_i^a c_i\mu_i \) , by plugging in the sample means as estimates of the population means.

\[C = \sum_{i=1}^a c_i\bar{y}_{i.}\]

Assuming the observations are normally distributed and every group has the same sample size \(n\), \(C\) follows a normal distribution with variance

\[V(C) = \frac{\sigma^2}{n}\sum_{i=1}^a c_i^2\]

and, if the null hypothesis is true, expected value \( E(C) = \mu_C^0 \). Knowing the distribution of \(C\), and estimating \( \sigma^2 \) with \( \text{MS}_E \), we can create a t statistic:

\[t_0 = \frac{\sum_{i=1}^a c_i\bar{y}_{i.} - \mu_C^0 }{\sqrt{\frac{\text{MS}_E}{n}\sum_{i=1}^a c_i^2}}\]

which, if the null hypothesis is true, follows a t distribution with \( N-a \) degrees of freedom.

From this t statistic we can calculate a p-value as we would for any other t test, and we would reject the null hypothesis if \(|t_0| > t_{\alpha/2, N-a} \).

Coffee Example Continued, T Test

To test whether the average of the true mean TDS of the recipes brewed at 5 degrees C differs from the average of the true mean TDS of the recipes brewed at 22 degrees C, we write the hypotheses as a linear contrast: \[H_0: \frac{1}{2}\mu_1 + \frac{1}{2}\mu_2 - \frac{1}{2}\mu_3 - \frac{1}{2}\mu_4 + 0\mu_5 = 0\]

vs. \[H_A: \frac{1}{2}\mu_1 + \frac{1}{2}\mu_2 - \frac{1}{2}\mu_3 - \frac{1}{2}\mu_4 + 0\mu_5 \neq 0\]

The contrast coefficients are \( c_1 = 1/2, c_2 = 1/2, c_3 = -1/2, c_4 = -1/2 \text{ and } c_5 = 0 \) . Using these coefficients and the sample means from above we get:

\[C = \sum_{i=1}^a c_i\bar{y}_{i.} = \frac{1}{2}(14.023) + \frac{1}{2}(18.421) - \frac{1}{2}(18.004) - \frac{1}{2}(22.746) + 0(27.351) = -4.153\]

We have neither the raw data nor \( \text{MS}_E \), but we can find \( \text{MS}_E \) by pooling the sample variances, weighting each by its degrees of freedom:

\[\text{MS}_E = \frac{(n_1 - 1) s_1^2 + (n_2 - 1) s_2^2 + \cdots + (n_a - 1) s_a^2}{N-a} \]

If all the sample sizes are equal, this reduces to a simple average: \( \text{MS}_E = (3.434^2 + 0.686^2 + 4.765^2 + 0.687^2 + 3.711^2) / 5 = 9.842 \). Notice that we include the sample variance from the 5th recipe. Even though its mean is not included in this contrast, it provides information about \( \sigma^2 \), provided that we trust our assumption that the variance is the same across all recipes.
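The pooled \( \text{MS}_E \) can be checked with a few lines of Python. This is a small sketch assuming \(n = 3\) per recipe, as above; `pooled_ms_error` is a hypothetical helper name.

```python
def pooled_ms_error(sds, ns):
    """MS_E from group standard deviations: a df-weighted average of the variances."""
    a, N = len(sds), sum(ns)
    return sum((n - 1) * s ** 2 for s, n in zip(sds, ns)) / (N - a)


sds = [3.434, 0.686, 4.765, 0.687, 3.711]   # recipes 1-5, from the table above
ns = [3] * 5                                # n = 3 per recipe, as assumed in the text
print(round(pooled_ms_error(sds, ns), 3))
```

With equal sample sizes every weight is \(n - 1 = 2\) and the denominator is \(N - a = 10\), so the result is the simple average of the five variances, about 9.842.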

Our t statistic is therefore:

\[t_0 = \frac{-4.153 - 0 }{\sqrt{\frac{9.842}{3}(1)}} = -2.2929\]

Note that the sum of the squared contrast coefficients is \( 4 \times (1/2)^2 = 1 \).

Our p-value is two times the probability that a random variable following a t distribution with 15 - 5 = 10 degrees of freedom is smaller than -2.2929. The alternative hypothesis is two-sided, so we multiply the tail probability by two. The p-value is 0.0448.
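The whole contrast t test, including the p-value, can be reproduced with a standard-library Python sketch. To avoid depending on a statistics package, the two-sided p-value is obtained by integrating the t density numerically with Simpson's rule; that numerical approach and the helper names are our own assumptions, not part of the original analysis. In practice a library routine (for example R's `pt`, or `scipy.stats.t.sf` in SciPy) would be used instead.

```python
import math


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_c = math.lgamma((df + 1) / 2) - math.lgamma(df / 2) - 0.5 * math.log(df * math.pi)
    return math.exp(log_c) * (1 + x * x / df) ** (-(df + 1) / 2)


def t_two_sided_p(t0, df, steps=2000):
    """P(|T| > |t0|): 1 minus P(|T| <= |t0|), the latter by Simpson's rule on [0, |t0|]."""
    b = abs(t0)
    h = b / steps                       # steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(b, df)
    for k in range(1, steps):
        total += (4 if k % 2 else 2) * t_pdf(k * h, df)
    return 1 - 2 * (total * h / 3)


def contrast_t_test(means, coefs, ms_error, n, df_error, mu0=0.0):
    """t test of H0: sum(c_i * mu_i) = mu0, assuming equal group sizes n."""
    if abs(sum(coefs)) > 1e-12:
        raise ValueError("contrast coefficients must sum to 0")
    C = sum(c * y for c, y in zip(coefs, means))
    se = math.sqrt(ms_error / n * sum(c * c for c in coefs))
    t0 = (C - mu0) / se
    return C, t0, t_two_sided_p(t0, df_error)


means = [14.023, 18.421, 18.004, 22.746, 27.351]   # recipes 1-5
coefs = [0.5, 0.5, -0.5, -0.5, 0.0]                # 5 C vs 22 C
C, t0, p = contrast_t_test(means, coefs, ms_error=9.842, n=3, df_error=15 - 5)
print(round(C, 3), round(t0, 4), round(p, 4))     # expected: -4.153 -2.2929 0.0448
```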

Confidence intervals

We can also construct confidence intervals for contrasts. A \(100(1-\alpha)\)% confidence interval for \(\Gamma = \sum_{i=1}^a c_i\mu_i \) is

\[\sum_{i=1}^a c_i\bar{y}_{i.} \pm t_{\alpha/2, N-a} \sqrt{\frac{\text{MS}_E}{n}\sum_{i=1}^a c_i^2}\]

This has the usual form of an interval for a normally distributed estimate whose variance must itself be estimated: the point estimate plus or minus a t critical value multiplied by the estimated standard error of the point estimate.

An important fact to note is that while the conclusions of the T test for a linear contrast are not sensitive to scaling of contrast coefficients, the bounds of the confidence intervals are.

Coffee Example Continued, Confidence Intervals

Plugging in the appropriate values for the contrast coefficients, sample means, \( \text{MS}_E \), and the t critical value ( \(2.228 = t_{0.025, 10}\) ), the 95% confidence interval for the linear contrast comparing TDS at different brewing temperatures is

\[-4.153 \pm 2.228 \sqrt{\frac{9.842}{3}(1)} = -4.153 \pm 4.036\]

that is, roughly (-8.189, -0.117). The interval excludes 0, which agrees with the t test rejecting the null hypothesis at \( \alpha = 0.05 \).

Confidence intervals depend on contrast coefficient scaling

If we had scaled all the contrast coefficients by a factor of 2, \(\sum_{i=1}^a c_i^2\) would equal 4, not 1, and the estimate would double to -8.306. This would widen our interval to \(-8.306 \pm 8.072 \). On the other hand, our test statistic for the t test, \( t_0 \), would remain the same, \( t_0 = -2.2929 \), because the numerator and the standard error are both doubled.

As a consequence, contrast coefficients are often written without fractions (for example 1, 1, -1, -1, 0 instead of 1/2, 1/2, -1/2, -1/2, 0) to keep things simple, since the results of hypothesis tests won’t change. However, the values of the contrast coefficients need to be carefully considered and interpreted when constructing confidence intervals. One solution is to use standardized contrast coefficients to facilitate comparisons across contrasts:

\[c_i^* = \frac{c_i}{\sqrt{\frac{1}{n}\sum_{j=1}^a c_j^2}}\]

This ensures that \( \sum_{i=1}^a c_i^*\bar{y}_{i.}\) has a variance of \( \sigma^2 \).
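A short Python sketch of how coefficient scaling affects the interval but not the test, assuming \(n = 3\), \( \text{MS}_E = 9.842 \), and the rounded critical value 2.228 used above; the helper names are hypothetical. It compares the original coefficients, the same coefficients doubled, and the standardized coefficients \( c_i^* \).

```python
import math


def contrast_ci(means, coefs, ms_error, n, t_crit):
    """Estimate and half-width of the CI for sum(c_i * mu_i), equal group sizes n."""
    estimate = sum(c * y for c, y in zip(coefs, means))
    half_width = t_crit * math.sqrt(ms_error / n * sum(c * c for c in coefs))
    return estimate, half_width


def standardize(coefs, n):
    """Rescale coefficients so that (1/n) * sum(c_i^2) = 1, i.e. Var(C) = sigma^2."""
    scale = math.sqrt(sum(c * c for c in coefs) / n)
    return [c / scale for c in coefs]


means = [14.023, 18.421, 18.004, 22.746, 27.351]   # recipes 1-5
coefs = [0.5, 0.5, -0.5, -0.5, 0.0]                # 5 C vs 22 C
n, ms_error, t_crit = 3, 9.842, 2.228               # t_crit = t_{0.025, 10}, rounded

versions = {
    "original": coefs,
    "doubled": [2 * c for c in coefs],
    "standardized": standardize(coefs, n),
}
for label, c in versions.items():
    est, hw = contrast_ci(means, c, ms_error, n, t_crit)
    # est / hw is the same for every scaling, so the test conclusion is unchanged
    print(f"{label:>12}: {est:8.3f} +/- {hw:.3f}   estimate/half-width = {est / hw:.4f}")
```

For every version the ratio of estimate to half-width equals \( t_0 / t_{0.025,10} \), which is why the test conclusion does not change. The half-width printed for the original coefficients may differ from 4.036 in the last digit because 2.228 is a rounded critical value.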

F test

We can also test the hypotheses

\[H_0: \sum_{i=1}^a c_i\mu_i = 0\] vs. \[H_A: \sum_{i=1}^a c_i\mu_i \neq 0\]

(that is, with \( \mu_C^0 \) set to 0) using an F test instead of a t test. Both tests give the same results, but as we’ll see, the F test provides a useful perspective on contrasts.

Just as we can find the Sum of Squares due to our factor/treatment, we can find the Sum of Squares due to the contrast (again assuming equal sample sizes \(n\)):

\[\text{SS}_C = \frac{\displaystyle \Big(\sum_{i=1}^a c_i \bar{y}_{i\cdot}\Big)^2}{\displaystyle \frac{1}{n}\sum_{i=1}^a c_i^2}\]

The F statistic is defined as:

\[F = \frac{\text{SS}_C/1}{\text{MS}_E} = \frac{\text{MS}_C}{\text{MS}_E} \]

There is 1 degree of freedom associated with a contrast, so \( \text{SS}_C \) and \( \text{MS}_C \) are the same. If the null hypothesis is true, this F statistic follows an F distribution with 1 and \( N-a \) degrees of freedom.

F test for coffee brewing temperature example

To test the hypotheses below about differences in coffee strength due to temperature, we calculate \( \text{SS}_C \), the F statistic, and then the p-value. \[H_0: \frac{1}{2}\mu_1 + \frac{1}{2}\mu_2 - \frac{1}{2}\mu_3 - \frac{1}{2}\mu_4 + 0\mu_5 = 0\]

vs. \[H_A: \frac{1}{2}\mu_1 + \frac{1}{2}\mu_2 - \frac{1}{2}\mu_3 - \frac{1}{2}\mu_4 + 0\mu_5 \neq 0\]

The \( \text{SS}_C \) is \[\text{SS}_C = \frac{\displaystyle \Big(\frac{1}{2}(14.023) + \frac{1}{2}(18.421) - \frac{1}{2}(18.004) - \frac{1}{2}(22.746) + 0(27.351)\Big)^2}{\displaystyle \frac{1}{3} \Big((\tfrac{1}{2})^2 + (\tfrac{1}{2})^2 + (-\tfrac{1}{2})^2 + (-\tfrac{1}{2})^2 + 0^2\Big)} = \frac{(-4.153)^2}{1/3} = 51.74\]

The observed f statistic is: \[f = \frac{51.74/1}{9.842} = 5.257\]

The p-value is \(P(F_{1,10} > 5.257) = 0.0448 \) . This is the exact same p-value as from the T test. Indeed, the f statistic is the t statistic squared.
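A quick numerical check of \( \text{SS}_C \), the F statistic, and the relationship \( F = t_0^2 \), written as a Python sketch using the same summary statistics and the rounded \( \text{MS}_E = 9.842 \); the helper name `ss_contrast` is hypothetical. It also confirms that, unlike the confidence interval, \( \text{SS}_C \) does not change when the coefficients are rescaled.

```python
import math

means = [14.023, 18.421, 18.004, 22.746, 27.351]   # recipes 1-5
coefs = [0.5, 0.5, -0.5, -0.5, 0.0]                # 5 C vs 22 C
n, ms_error = 3, 9.842


def ss_contrast(coefs):
    """Sum of squares for a contrast, assuming equal group sizes n."""
    C = sum(c * y for c, y in zip(coefs, means))
    return C ** 2 / (sum(c * c for c in coefs) / n)


ss_c = ss_contrast(coefs)
F = ss_c / ms_error                   # 1 df for the contrast, so MS_C = SS_C

C = sum(c * y for c, y in zip(coefs, means))
t0 = C / math.sqrt(ms_error / n * sum(c * c for c in coefs))

print(round(ss_c, 2), round(F, 3), round(t0 ** 2, 3))
# Scaling the coefficients leaves SS_C (and therefore F) unchanged
print(math.isclose(ss_contrast([2 * c for c in coefs]), ss_c))
```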

The t test and the F test give identical results, which raises the question, “Why learn both?” The answer lies in the potential to use \( \text{SS}_C \) to partition \( \text{SS}_{\text{Treatment}} \) among \( a-1 \) orthogonal contrasts. We turn to that topic next.

Orthogonal contrasts

After performing a one-way ANOVA overall test, we might be interested in more than one follow-up contrast. For example, we might want to compare the averages of the recipes with different temperatures (as we’ve been discussing), and also the averages of the recipes with different methods (excluding the hot-brewed French press). We would therefore need two contrasts:

The first contrast, \(C_1\), has coefficients: \(c_{11} = 1/2, c_{12} = 1/2, c_{13} = -1/2, c_{14} = -1/2, c_{15} = 0\).

The second contrast, \(C_2\), has coefficients: \(c_{21} = 1/2, c_{22} = -1/2, c_{23} = 1/2, c_{24} = -1/2, c_{25} = 0\).

Here the first subscript of the contrast coefficients corresponds to the contrast number from j = 1, …, k. The second subscript corresponds to the factor level from i = 1, …, a.

A set of contrasts \( C_j \), for \( j = 1, 2, \ldots, k \), is said to be orthogonal if the dot products of all pairs of their coefficient vectors are 0 (this form of the condition assumes equal sample sizes):

\[\sum_{i=1}^a c_{pi}c_{qi} = 0 \text{ for } p \neq q\]

To show that \( C_1 \) and \(C_2\) are orthogonal:

\[(1/2)(1/2) + (1/2)(-1/2) + (-1/2)(1/2) + (-1/2)(-1/2) + (0)(0) = 1/4 - 1/4 - 1/4 + 1/4 + 0 = 0\]

Orthogonal contrasts have many desirable properties that will be discussed more later. A set of \(a-1\) orthogonal contrasts completely partitions \( \text{SS}_{\text{Treatment}} \), and their primary benefit is that, as a set, they are easier to interpret than non-orthogonal contrasts. In the extended example below we will use several orthogonal contrasts.
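To illustrate the partitioning claim, the Python sketch below checks that four contrasts on the coffee recipes are mutually orthogonal and that their contrast sums of squares add up to \( \text{SS}_{\text{Treatment}} = n\sum_i(\bar{y}_{i.}-\bar{y}_{..})^2 \), assuming equal sample sizes \(n = 3\). \(C_1\) and \(C_2\) are the temperature and method contrasts above; the other two (a method-by-temperature interaction contrast and a cold-versus-hot contrast) are our own illustrative choices that complete a set of \(a - 1 = 4\), not contrasts taken from the original analysis.

```python
from itertools import combinations

means = [14.023, 18.421, 18.004, 22.746, 27.351]   # recipes 1-5
n = 3                                               # equal sample sizes assumed

contrasts = {
    "temperature (C1)": [1/2, 1/2, -1/2, -1/2, 0],
    "method (C2)": [1/2, -1/2, 1/2, -1/2, 0],
    # Illustrative choices (not from the original analysis) completing a - 1 = 4:
    "method x temperature": [1/2, -1/2, -1/2, 1/2, 0],
    "cold vs hot": [1, 1, 1, 1, -4],
}

# Orthogonality: every pair of coefficient vectors has dot product 0
for (p, cp), (q, cq) in combinations(contrasts.items(), 2):
    dot = sum(x * y for x, y in zip(cp, cq))
    assert dot == 0, f"{p} and {q} are not orthogonal"


def ss_contrast(coefs):
    """Sum of squares for one contrast (equal n)."""
    C = sum(c * y for c, y in zip(coefs, means))
    return C ** 2 / (sum(c * c for c in coefs) / n)


grand_mean = sum(means) / len(means)
ss_treat = n * sum((y - grand_mean) ** 2 for y in means)

parts = {name: ss_contrast(c) for name, c in contrasts.items()}
for name, ss in parts.items():
    print(f"{name:>22}: {ss:8.3f}")
print(f"{'sum of contrast SS':>22}: {sum(parts.values()):8.3f}")
print(f"{'SS_Treatment':>22}: {ss_treat:8.3f}")
```

The last two printed lines should agree, showing that the four orthogonal contrasts split \( \text{SS}_{\text{Treatment}} \) into non-overlapping pieces, each with 1 degree of freedom.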

More examples

Linear contrasts encompass many possible comparisons, so understanding how to use them gives a researcher the ability to ask more questions.

more examples, use orthogonal contrasts.